Difference between revisions of "Consolidate Domain Decomposition"

From MohidWiki

Revision as of 15:52, 9 March 2015

The program Consolidate Domain Decomposition uses the actor model to parallelize file processing tasks. For instance, suppose there are hydrodynamic and water quality files from 2 sub-domains:

- hyd_submod_1.hdf and wq_submod_1.hdf; and

- hyd_submod_2.hdf and wq_submod_2.hdf.

The desired consolidated files are hyd.hdf and wq.hdf, resulting from the concatenation of:

- hyd_submod_1.hdf and hyd_submod_2.hdf to produce hyd.hdf; and

- wq_submod_1.hdf and wq_submod_2.hdf to produce wq.hdf.
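The grouping in this example can be sketched in a few lines of Python. This is only an illustration: the pattern "<name>_submod_<n>.hdf" is taken from the example file names above, and the function name group_by_consolidated_file is hypothetical. The real application does not guess from file names; it reads the "MPI_*_DecomposedFiles.dat" files produced by Mohid.

```python
import re
from collections import defaultdict

# Assumed naming convention, taken from the example above:
# "<name>_submod_<n>.hdf" is sub-domain n of the consolidated file "<name>.hdf".
SUBMOD_PATTERN = re.compile(r"^(?P<name>.+)_submod_(?P<index>\d+)\.hdf$")


def group_by_consolidated_file(filenames):
    """Map each consolidated file name to its sub-domain files, ordered by index."""
    groups = defaultdict(list)
    for filename in filenames:
        match = SUBMOD_PATTERN.match(filename)
        if match is None:
            continue  # not a decomposed file under the assumed convention
        target = match["name"] + ".hdf"
        groups[target].append((int(match["index"]), filename))
    # Sort the parts of each target by sub-domain index and drop the index.
    return {target: [f for _, f in sorted(parts)] for target, parts in groups.items()}


decomposed = ["hyd_submod_1.hdf", "wq_submod_1.hdf", "hyd_submod_2.hdf", "wq_submod_2.hdf"]
jobs = group_by_consolidated_file(decomposed)
for target, parts in jobs.items():
    print(target, "<-", parts)
```

Each entry of the resulting dictionary corresponds to one consolidation job: a target file and the decomposed files that are joined to produce it.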

Run the program

Windows and *NIX systems run the program the same way, from the command line. To run the program, go to the directory that contains the file "Tree.dat". Most likely you will run on a single machine: the number of Parsers must be 1 and the number of Workers can be any number greater than 1. Choose the number of Workers so that it balances the number of available cores against the number of files to join. The application parses the "MPI_*_DecomposedFiles.dat" files produced by Mohid. The consolidated files are placed in the same directory as the decomposed files. The command to run the application is:

- mpiexec -localonly -n 1 DDCParser.exe : -n 2 DDCWorker.exe
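As a rough aid for choosing the number of Workers, the sketch below builds the command line shown above for a given Worker count. The helper names and the balancing rule (leave one core for the Parser, never start more Workers than there are files to join, never go below 2) are assumptions made for illustration; they are not part of the application.

```python
import os


def suggest_worker_count(n_files, n_cores=None):
    """Assumed heuristic: balance available cores against the files to join."""
    cores = n_cores if n_cores is not None else (os.cpu_count() or 2)
    # One core is left for the Parser; the text asks for more than 1 Worker.
    return max(2, min(cores - 1, n_files))


def build_command(n_workers):
    """Return the mpiexec command line for 1 Parser and n_workers Workers."""
    if n_workers < 2:
        raise ValueError("the number of Workers must be greater than 1")
    return f"mpiexec -localonly -n 1 DDCParser.exe : -n {n_workers} DDCWorker.exe"


# Example: 10 files to join on a machine with 4 cores.
print(build_command(suggest_worker_count(n_files=10, n_cores=4)))
```

The script only prints the command; it does not start any MPI process.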

Topology

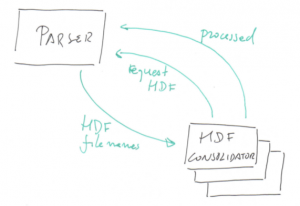

There are 2 kinds of MPI processes running:

- a Parser; and

- any number of Workers.

The Parser identifies the result files to be consolidated and keeps a list of jobs to be done. At the end, when all files are merged, it broadcasts a "poison pill" message notifying the Workers that they must terminate. The list of jobs works like this:

- when a Worker is idle, it sends a request to the Parser;

- the Parser sends the first unfinished job to the Worker and moves it from the first to the last position of the list;

- when a Worker finishes a job, it sends a "processed" message to the Parser;

- the Parser removes the completed job from the list.
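The steps above can be sketched as a small Python class. This is a minimal, single-process illustration of the list handling only, not the application's code: the class and method names are hypothetical, and the MPI messaging between Parser and Workers is left out.

```python
from collections import deque


class JobList:
    """Rotating list of unfinished jobs, as kept by the Parser (illustrative)."""

    def __init__(self, jobs):
        self._pending = deque(jobs)

    def next_job(self):
        """Return the first unfinished job and move it to the end of the list."""
        if not self._pending:
            return None  # nothing left: time to send the "poison pill"
        job = self._pending[0]
        self._pending.rotate(-1)  # first element becomes the last one
        return job

    def mark_processed(self, job):
        """Remove a completed job; a second report for the same job is ignored."""
        try:
            self._pending.remove(job)
        except ValueError:
            pass

    def all_done(self):
        return not self._pending


jobs = JobList(["hyd.hdf", "wq.hdf"])
first = jobs.next_job()     # "hyd.hdf" goes to an idle Worker
second = jobs.next_job()    # "wq.hdf" goes to another idle Worker
jobs.mark_processed(first)  # the first Worker reports back
third = jobs.next_job()     # "wq.hdf" is still unfinished, so it is handed out again
jobs.mark_processed(second)
jobs.mark_processed(third)  # duplicate report, harmless
print(jobs.all_done())
```

Because a job stays in the list until a "processed" message arrives, the Parser needs no timeouts or bookkeeping of which Worker holds which job.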

This strategy for the jobs list ensures fault tolerance. Suppose a job is sent to a Worker that, for some reason, does not complete it. That job will eventually be sent to another Worker. It may occur that a job is executed twice, but the program is idempotent, so no harm occurs...
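The following Python sketch simulates this behaviour in a single process. It is an illustration under stated assumptions: the functions consolidate and simulate are hypothetical, the "merge" is a string join standing in for the real file concatenation, and a lost job is modelled as a Worker that never reports back.

```python
from collections import deque


def consolidate(outputs, target, parts):
    """Idempotent stand-in for merging: the result depends only on the inputs."""
    outputs[target] = "+".join(parts)


def simulate(jobs, lost_first_attempt):
    """Process jobs in rotating order; jobs in lost_first_attempt are dropped once."""
    pending = deque(jobs)  # one entry per consolidated file name
    lost = set(lost_first_attempt)
    outputs, attempts = {}, []
    while pending:
        target = pending[0]
        pending.rotate(-1)        # the job moves to the end of the list
        attempts.append(target)
        if target in lost:
            lost.discard(target)  # the Worker never sends "processed"
            continue
        consolidate(outputs, target, jobs[target])
        pending.remove(target)    # "processed" received by the Parser
    return outputs, attempts


jobs = {
    "hyd.hdf": ["hyd_submod_1.hdf", "hyd_submod_2.hdf"],
    "wq.hdf": ["wq_submod_1.hdf", "wq_submod_2.hdf"],
}
outputs, attempts = simulate(jobs, lost_first_attempt={"hyd.hdf"})
print(attempts)

# Executing a job a second time leaves the result unchanged.
before = dict(outputs)
consolidate(outputs, "hyd.hdf", jobs["hyd.hdf"])
print(outputs == before)
```

The lost job reappears at the end of the list and is completed on its second attempt, and repeating a job produces the same output as running it once.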