Consolidate Domain Decomposition

From MohidWiki

The program Consolidate Domain Decomposition uses the actor model to parallelize file processing tasks. For instance, suppose there are hydrodynamic and water quality files from 2 sub-domains:

- hyd_submod_1.hdf and wq_submod_1.hdf; and

- hyd_submod_2.hdf and wq_submod_2.hdf.

The desired consolidated files are hyd.hdf and wq.hdf, resulting from the concatenation of:

- hyd_submod_1.hdf and hyd_submod_2.hdf to produce hyd.hdf; and

- wq_submod_1.hdf and wq_submod_2.hdf to produce wq.hdf.
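Grouping the sub-domain files into consolidation jobs can be sketched in Python as below. This is only an illustration, not the program's actual code. It assumes that every sub-domain result follows the `<prefix>_submod_<n>.hdf` naming used in the example above, and the function name `build_jobs` is hypothetical.

```python
import re
from collections import defaultdict

# Assumed naming pattern: <prefix>_submod_<n>.hdf, e.g. hyd_submod_1.hdf
SUBDOMAIN_FILE = re.compile(r"^(?P<prefix>.+)_submod_(?P<index>\d+)\.hdf$")


def build_jobs(filenames):
    """Map each consolidated output (e.g. hyd.hdf) to its sub-domain inputs."""
    groups = defaultdict(list)
    for name in filenames:
        match = SUBDOMAIN_FILE.match(name)
        if match is None:
            continue  # not a sub-domain result file: ignore it
        output = match.group("prefix") + ".hdf"
        groups[output].append((int(match.group("index")), name))
    # Sort numerically so that submod_10 comes after submod_2
    return {out: [name for _, name in sorted(items)] for out, items in groups.items()}


files = ["wq_submod_2.hdf", "hyd_submod_1.hdf", "hyd_submod_2.hdf", "wq_submod_1.hdf"]
for output, inputs in sorted(build_jobs(files).items()):
    print(output, "<-", inputs)
# hyd.hdf <- ['hyd_submod_1.hdf', 'hyd_submod_2.hdf']
# wq.hdf <- ['wq_submod_1.hdf', 'wq_submod_2.hdf']
```

Each entry of the resulting dictionary corresponds to one job in the Parser's list.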

Topology

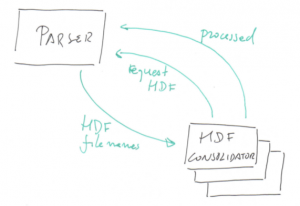

There are 2 kinds of MPI processes running:

- a Parser; and

- any number of Workers.

The Parser identifies the result files to be consolidated and keeps a list of jobs to be done. At the end, when all files have been merged, it broadcasts a "poison pill" message notifying the Workers that they must terminate. The list of jobs works like this:

- when a Worker is idle, it sends a request to the Parser;

- the Parser sends the first unfinished job to the worker and moves it from the first to the last position of the list;

- when a Worker finishes a job, it sends a "processed" message to the Parser;

- the Parser removes the completed job from the list.
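The Parser's bookkeeping for these steps can be sketched as a rotating queue. This is a minimal sketch assuming jobs can be compared for equality; the class `JobList` and its methods are hypothetical names, and the use of `collections.deque` is a choice made for this illustration, not necessarily what the program does.

```python
from collections import deque


class JobList:
    """Parser-side list of unfinished jobs (hypothetical sketch)."""

    def __init__(self, jobs):
        self._pending = deque(jobs)

    def next_job(self):
        """Answer an idle Worker's request.

        Returns the first unfinished job and rotates it to the last
        position, or None when no job is left (time for the poison pill).
        """
        if not self._pending:
            return None
        job = self._pending.popleft()
        self._pending.append(job)  # stays listed until reported as processed
        return job

    def mark_processed(self, job):
        """Remove a job reported as processed; repeated reports are ignored."""
        if job in self._pending:
            self._pending.remove(job)

    def done(self):
        return not self._pending


jobs = JobList(["hyd.hdf", "wq.hdf"])
first = jobs.next_job()     # "hyd.hdf", sent to Worker A
second = jobs.next_job()    # "wq.hdf", sent to Worker B
jobs.mark_processed(second)
retry = jobs.next_job()     # "hyd.hdf" again: Worker A has not reported it yet
jobs.mark_processed(first)
jobs.mark_processed(retry)  # duplicate report, harmless
print(jobs.done())          # True
```

Moving a handed-out job to the back, instead of removing it, is what lets an unreported job come around again.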

This strategy for the job list ensures fault tolerance. Suppose a job is sent to a Worker that, for some reason, does not complete it. Since that job is never reported as processed, it stays in the list and will eventually be sent to another Worker. A job may therefore be executed twice, but the processing is idempotent, so no harm is done.
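The fault-tolerance argument can be checked with a small single-machine simulation of the actor model. In this sketch, Python threads with `queue.Queue` inboxes stand in for MPI processes and messages, byte concatenation stands in for merging HDF files, and `POISON_PILL`, `parser`, `worker` and `consolidate` are hypothetical names. Worker 0 is made to stop without reporting the first job it receives, yet every job is still completed. The sketch assumes at least one healthy Worker (`n_workers >= 2`).

```python
import queue
import threading

POISON_PILL = None  # hypothetical sentinel for the termination message


def parser(jobs, inbox, worker_inboxes):
    """Serve requests from a rotating job list, then broadcast the poison pill."""
    pending = list(jobs)
    while pending:
        kind, worker_id, job = inbox.get()
        if kind == "request":
            job = pending.pop(0)   # first unfinished job...
            pending.append(job)    # ...moves to the last position
            worker_inboxes[worker_id].put(job)
        elif kind == "processed" and job in pending:
            pending.remove(job)    # a duplicate report is simply ignored
    for box in worker_inboxes.values():
        box.put(POISON_PILL)


def worker(worker_id, inbox, parser_inbox, sources, results, lock, crash=False):
    """Request jobs until the poison pill arrives; optionally fail on the first job."""
    while True:
        parser_inbox.put(("request", worker_id, None))  # idle: ask for work
        job = inbox.get()
        if job is POISON_PILL:
            return
        if crash:
            return  # simulated failure: the job is never reported as processed
        output, inputs = job
        merged = b"".join(sources[name] for name in inputs)  # stand-in for HDF merge
        with lock:
            results[output] = merged  # idempotent: same key, same bytes
        parser_inbox.put(("processed", worker_id, job))


def consolidate(jobs, sources, n_workers=3):
    parser_inbox = queue.Queue()
    inboxes = {i: queue.Queue() for i in range(n_workers)}
    results, lock = {}, threading.Lock()
    threads = [
        threading.Thread(
            target=worker,
            args=(i, inboxes[i], parser_inbox, sources, results, lock),
            kwargs={"crash": i == 0},  # worker 0 fails on the first job it gets
        )
        for i in range(n_workers)
    ]
    for t in threads:
        t.start()
    parser(jobs, parser_inbox, inboxes)  # the Parser runs in the main thread
    for t in threads:
        t.join()
    return results


JOBS = [
    ("hyd.hdf", ("hyd_submod_1.hdf", "hyd_submod_2.hdf")),
    ("wq.hdf", ("wq_submod_1.hdf", "wq_submod_2.hdf")),
]
SOURCES = {
    "hyd_submod_1.hdf": b"H1", "hyd_submod_2.hdf": b"H2",
    "wq_submod_1.hdf": b"W1", "wq_submod_2.hdf": b"W2",
}
print(sorted(consolidate(JOBS, SOURCES).items()))
# [('hyd.hdf', b'H1H2'), ('wq.hdf', b'W1W2')]
```

Because re-running a job writes the same bytes under the same key, a job executed twice leaves the result unchanged, which is the idempotence the fault-tolerance argument relies on.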